The Emergence and Rise of Mobile Applications

New technology keeps enabling the delivery of traditional services in new ways. In the banking sector, for example, ATMs came into use in the 1970s, followed by Internet banking in the 1990s, and since 2010 banking has found a new platform: the mobile device, or smartphone.

Mobile applications have gone from being a fun, cool possession of the young and enthusiastic to a business-critical need of tech-savvy business users. Mobile delivery is still seen as a value-added service, but it is likely to become the de facto medium for delivering almost all services and products in the near future.

By the time you read this, you may already have made a fund transfer from a mobile device. Or you may be planning to do business on the move, without waiting for your laptop to boot, or while on a vacation where you do not want to carry the epitome of work, your laptop. User empowerment has increased sharply now that anytime, anywhere access is in the hands of the end user on the go.

According to industry estimates, the enterprise mobility business alone is expected to be close to 180 billion by 2017. This spending aims to optimize business cycles by pushing them toward real-time, 24x7 operation, free of restrictions on location and dependencies on specific networks.

Managing the Enterprise Mobility Space

Enterprise mobility has carved out a clear space for itself in the IT business world, and new concepts and principles are being established to absorb it into the business. They are needed to ensure that mobility growth is efficient, effective, and in line with the existing values and propositions of the business. Some of the new terms that have cropped up with the advent of enterprise mobility are as follows:

MDM (Mobile Device Management):

A software suite used by companies to control, encrypt, and enforce policies on their users’ mobile devices, such as tablets and smartphones.

MAM (Mobile Application Management):

A software suite used by companies to control, encrypt, and enforce policies on the applications installed on their users’ mobile devices, such as tablets and smartphones.

MIM (Mobile Information Management):

A software suite used by companies to control, encrypt, and enforce policies on the data synced to their users’ mobile devices, such as tablets and smartphones.

BYOD (Bring Your Own Device):

A concept in which end users bring their own devices and connect them to corporate resources, such as the network, machines, and applications. MDM, MAM, and MIM are the suggested enablers for implementing BYOD; however, it is not necessary to use them together.

BYOA (Bring Your Own Application):

A concept that promotes the use of third-party applications and cloud services in the workplace. Security, compliance, and regulatory concerns are the primary risk areas, and they can be mitigated with an appropriate combination of MDM, MAM, and MIM.

These guiding principles help bring the enterprise mobility space within the existing constraints that business functions require. They bring opportunities and risks with them, and enterprises are adopting them partially or fully depending on the need, vision, and type of business.

Enterprise Mobile Application Development and Testing Challenges

Ever Evolving Mobile Technologies

Although mobile technologies have been around for some time, they are far from standardized. Every other day brings a new announcement or release on one platform or another. The competitive battle to bring out the best before anybody else has even reached the courtrooms in an effort to establish market dominance. The rush to push the latest technology to the end user has brought unprecedented empowerment and benefits to end users, and businesses have benefited in the process as well.

Shorter Go to Market Time with Latest Features

The rush of evolving technologies keeps development and testing groups on their toes. The dual pressure of optimizing a newly established delivery process and including the latest features and functionality in the application keeps the team busy with increased development/testing and re-development/re-testing effort. The pressure to reduce time to market is greater than in any other stream of technology.

For non-enterprise mobile applications, where most functions do not depend on back-office operations, delivery cycles are as short as a couple of months. The bigger challenge is for enterprise applications: core enterprise functionality developed over years has to be put on the user’s plate on a mobile device in a relatively short cycle and with relatively small teams.

Increasing Mobile Platform Base

In the recent past, mobile technology was reserved for the top brass of management, and there were few platform players in the market. Organizations had to choose primarily between two players, the Apples and the BlackBerrys of the world. Android and Windows Mobile have since eaten a considerable share of the earlier leaders’ market, even though they seem to have only just started expanding their base. With the user base extending to lower management and the consumer application market opening up, the platform and device base has grown rapidly.

From supporting a few devices on a single platform, enterprise applications now have to support all the premier platforms and many more primary devices on each. The premier fighters in the platform market are Apple, BlackBerry, Android, and Windows Mobile. Each platform player is acquiring OEMs (Original Equipment Manufacturers) or tying up with them (Google eating Motorola, Samsung flirting with Windows Mobile) to dish out more and more devices. Both the consumer and the enterprise application markets are under constant pressure to support all or most newly released devices. Product and delivery teams are always in a quandary about which platforms and how many devices to support.

Delivery teams are looking at alternatives such as MEAPs (Mobile Enterprise Application Platforms) to cope with the growing mobile platform base, keep costs under control, and reduce time to market. MEAPs aim to offset the huge development, maintenance, and upgrade effort required in native platform environments by using the “Write Once and Deploy Everywhere” paradigm. They abstract the implementation complexities of the native platforms, enabling the development team to focus on business functionality.

However, the picture in the MEAP world is not as rosy as it looks. MEAPs have their own constraints and dependencies, because of which the team may not be able to use the full potential offered by the native platforms. It’s a tradeoff between the effort required to port to new platforms and having full control over platform features.

Integrating with Mainstream Enterprise

Making enterprise applications available on mobile devices requires a huge integration effort. Enterprise applications such as CRM, ERP, and billing must be integrated with the mobile application so that end users can access them from mobile devices. The integration effort needs to ensure that related aspects, such as database access, authentication, and data integrity, are handled through a secure and well-defined interface.

Without proper and thorough integration testing, deployments are likely to fall short of user requirements and may cause the project to fail, even if the application works perfectly in isolation. Creating test simulators for a mobile application is a huge challenge if the interface to the back-office/enterprise application is not well defined.
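To illustrate why a well-defined interface makes simulators feasible, here is a minimal sketch in Python. It assumes the back office can be described by an explicit contract; `BillingBackend`, `FakeBillingBackend`, and `MobileBillingClient` are hypothetical names invented for this example, not part of any real product. The mobile-side logic depends only on the contract, so a test can swap the real system for an in-memory simulator.

```python
from typing import Dict, Protocol


class BillingBackend(Protocol):
    """Hypothetical contract between the mobile app and the back office."""

    def get_balance(self, account_id: str) -> int:
        """Return the balance in the smallest currency unit (non-negative)."""
        ...


class FakeBillingBackend:
    """In-memory simulator standing in for the real enterprise system."""

    def __init__(self, balances: Dict[str, int]) -> None:
        self._balances = dict(balances)

    def get_balance(self, account_id: str) -> int:
        if account_id not in self._balances:
            raise KeyError(f"unknown account: {account_id}")
        return self._balances[account_id]


class MobileBillingClient:
    """Mobile-side logic that depends only on the contract, not the real system."""

    def __init__(self, backend: BillingBackend) -> None:
        self._backend = backend

    def balance_label(self, account_id: str) -> str:
        try:
            units = self._backend.get_balance(account_id)
        except KeyError:
            return "Account not found"
        # Assumes a non-negative balance with two decimal places.
        return f"Balance: {units // 100}.{units % 100:02d}"


client = MobileBillingClient(FakeBillingBackend({"A-1": 12345}))
print(client.balance_label("A-1"))
print(client.balance_label("missing"))
```

If the contract is vague, for example if it is unclear how an unknown account is reported, the simulator and the real system can drift apart, which is the difficulty described above.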

Addressing Security Concerns

Security is the biggest deterrent to widespread adoption of enterprise mobile applications. Exposing business-critical and confidential data through enterprise mobile applications is a huge risk. Several new techniques, such as MDM (mobile device management), MAM (mobile application management), and MIM (mobile information management), are being put in place to gain greater control over the mobile ecosystem and enhance security. Several other security threats loom over enterprise mobile applications, such as hacking via platform/network loopholes, loss of mobile devices, and mobile malware. According to a recent study, mobile malware doubles every year. A few examples are applications that collect personal information, such as passwords and browsing history, and applications that send SMS messages or make calls to paid numbers.

Mobile security threats can be countered by following a few best practices, such as:

o Confirming compliance with PCI DSS and SOX

o Avoiding client-side data storage to the maximum possible extent

o Enabling data protection mechanisms for data in transit

o Using strong authentication mechanisms for accessing resources

o Implementing a least-privilege authorization policy

o Protecting against client-side injection and denial of service

o Protecting against third-party malicious code using MAM

o Implementing strong server-side controls
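As an illustration of one item from the list, the least-privilege authorization policy, here is a minimal server-side sketch in Python. The roles and permission names are hypothetical and exist only for this example; the point is the deny-by-default rule, where a request is allowed only if the permission was explicitly granted to the role.

```python
from typing import Dict, FrozenSet

# Hypothetical role-to-permission map: each role gets only what it needs.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "field_agent": frozenset({"orders:read"}),
    "manager": frozenset({"orders:read", "orders:approve"}),
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and ungranted permissions are rejected."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


print(is_allowed("manager", "orders:approve"))
print(is_allowed("field_agent", "orders:approve"))
print(is_allowed("contractor", "orders:read"))
```

Keeping this check on the server rather than in the mobile client also fits the last item in the list, since a compromised device cannot bypass it.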

Lack of End-to-End Testing/Verification Frameworks

Over the years, competing technologies and tools have been developed and stabilized to meet the testing and verification needs of various enterprise applications. However, because the mobile ecosystem has emerged so quickly from diverse platforms and equipment manufacturers, no standard tool/framework exists to cater to the diverse testing needs of enterprise mobile applications.

End-to-end verification of enterprise mobile applications requires an in-depth understanding of enterprise operations and expertise in mobile technologies. It is not possible to test/verify the entire spectrum of enterprise mobile applications using one tool. A well-designed automation strategy is imperative to support the manual testing effort of certifying the application on a broad device and platform base. A combination of the testing tools/frameworks available for enterprise and mobile application testing needs to be used.

Testing/Verification Approaches

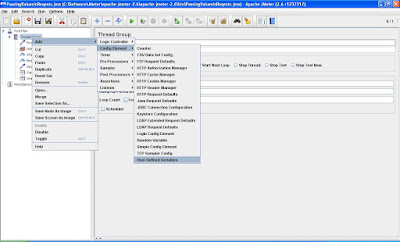

Several tools are available to bridge the testing requirements of the enterprise mobile spectrum. Testing can be done locally on the desktop using emulators or physical devices, or remotely using a cloud service. Remote cloud services, such as DeviceAnywhere and Perfecto Mobile, provide access to device labs and to the local network on which the application is to be deployed. These tools are very helpful in scenarios where network testing is required. However, local testing should be the preferred method for full functional regression of the mobile application. For local testing, tools can be broadly categorized by their approach to verifying mobile applications, as follows:

o Native object recognition based: Object recognition tools identify the elements of the application as objects, such as text boxes, labels, and buttons, along with their properties, such as disabled, enabled, and hidden.

o Image/Co-ordinate recognition based: Image based tools identify application components as images and try to locate them using relative or absolute co-ordinates.
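The difference between the two approaches can be sketched with a toy model in Python. This is not how any particular tool works: `Widget`, `find_by_id`, and `find_at` are hypothetical names, and the model covers only the absolute co-ordinate case, not image matching. It shows a recorded tap position resolving to a different element once the layout changes, while a lookup by object identity still finds the intended button and can read its properties.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Widget:
    widget_id: str
    kind: str
    enabled: bool
    bounds: Tuple[int, int, int, int]  # left, top, right, bottom


def find_by_id(screen: List[Widget], widget_id: str) -> Optional[Widget]:
    """Object recognition: locate an element by identity, wherever it is drawn."""
    for widget in screen:
        if widget.widget_id == widget_id:
            return widget
    return None


def find_at(screen: List[Widget], x: int, y: int) -> Optional[Widget]:
    """Co-ordinate recognition: whatever occupies the point is the target."""
    for widget in screen:
        left, top, right, bottom = widget.bounds
        if left <= x < right and top <= y < bottom:
            return widget
    return None


old_layout = [Widget("login", "Button", True, (0, 0, 100, 40))]
# A banner is added above the button, pushing the button down the screen.
new_layout = [
    Widget("banner", "Label", True, (0, 0, 100, 40)),
    Widget("login", "Button", True, (0, 50, 100, 90)),
]

RECORDED_TAP = (50, 20)  # position captured against the old layout

print(find_at(old_layout, *RECORDED_TAP).widget_id)
print(find_at(new_layout, *RECORDED_TAP).widget_id)
print(find_by_id(new_layout, "login").enabled)
```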

Advantages of Image-Based Automation Tools

The majority of tools available in the market are image-based because of advantages such as:

o Image based tools are application technology independent

o Image based tools are compatible with all the mobile platforms

o Easy to learn and implement

o Scripts can be reused across platforms if the UI components are locked

Disadvantages of Image-Based Automation Tools

Even though the majority of tools are image-based, an image-based UI automation approach may not be a good choice when deep, invasive, and maintainable functional tests are required, for the following reasons:

o Changes in the UI layouts severely impact test scripts making them obsolete

o Relative lack of dependability of test results, even with minor changes in the UI

o Limited verification of application components

o High long term maintenance cost

List of Testing/Automation Tools

The following is a list of major tools for mobile application testing:

o DeviceAnywhere (All major platforms)

o Perfecto Mobile (All major platforms)

o Eggplant (iOS, Android, BlackBerry)

o SilkMobile (iOS, Android, BlackBerry)

o JamoSolutions (iOS, Android, BlackBerry, Windows Mobile)

o Test Quest (Android, BlackBerry, Symbian, Windows Mobile)

o UIAutomation Framework (iOS)

o Robotium (Android)

o FoneMonkey (Android, iOS)

o MonkeyRunner (Android)

o Monkey (Android)

o SeeTestStudio (iOS, Android, BlackBerry, Windows Mobile, Symbian)

The list is ever growing, and there may be special tools for special cases. Please suggest additions to the list.